Kepler lifts OpenAI’s internal data intelligence

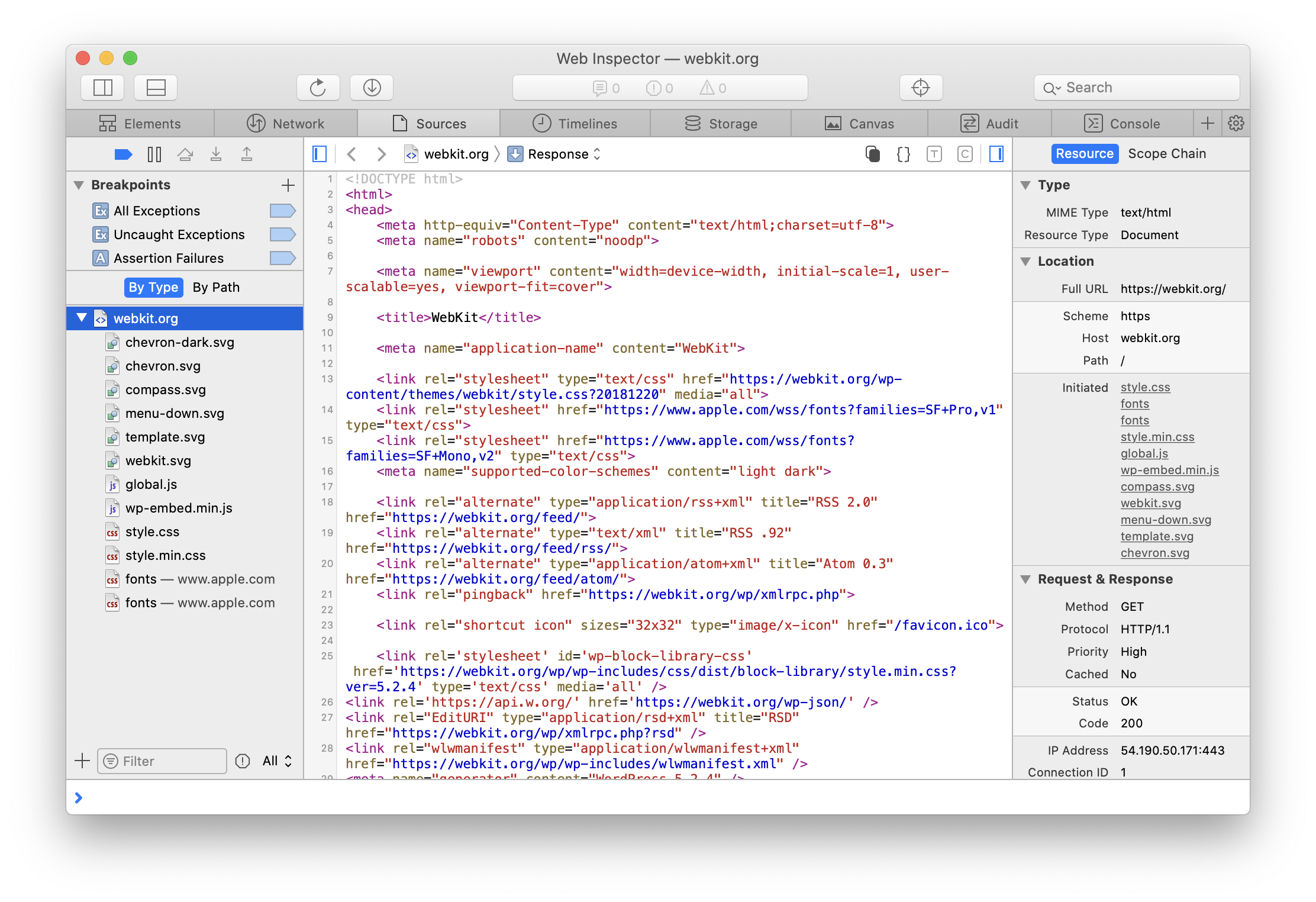

OpenAI has introduced an internal data agent known as Kepler, designed to let employees interrogate one of the largest private data estates in the technology sector through natural-language queries. The system, powered by a GPT-5.2-class model, is built to handle more than 600 petabytes of internal information, a scale that reflects both the company’s rapid growth and the expanding complexity of its training, safety, product telemetry and enterprise operations data.

People familiar with the rollout say Kepler is not a consumer product and is restricted to internal use, aimed at reducing the friction between staff and vast, fragmented datasets accumulated across research, infrastructure, policy and business functions. The agent allows engineers, researchers and operations teams to ask questions in plain English rather than writing bespoke queries or navigating multiple dashboards, returning structured answers, summaries and pointers to underlying records.

At the core of Kepler is a six-layer context architecture designed to manage relevance and safety while operating at extreme scale. The lowest layers focus on raw data access controls and schema awareness, ensuring that the agent understands what datasets exist and who is permitted to see them. Higher layers add semantic understanding, temporal context and task intent, allowing the system to distinguish between exploratory questions, operational checks and research-grade analysis. The final layer governs response synthesis, applying internal policy constraints and audit logging before answers are delivered.
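The layering described above can be pictured as a chain of small functions, each enriching a shared context before the next one runs. The following is a minimal sketch only: Kepler's actual implementation is not public, and every name here (`Context`, `PERMISSIONS`, `CATALOGUE`, the keyword rules) is a hypothetical stand-in chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Context:
    user: str
    question: str
    datasets: list[str] = field(default_factory=list)
    notes: dict[str, str] = field(default_factory=dict)
    audit: list[str] = field(default_factory=list)


# Hypothetical permission table and data catalogue (illustrative values only).
PERMISSIONS = {"alice": {"training_runs", "product_telemetry"}}
CATALOGUE = {
    "training_runs": ["run_id", "loss", "started_at"],
    "product_telemetry": ["event", "ts"],
    "finance": ["invoice_id", "amount"],
}


def access_control(ctx: Context) -> Context:
    # Layer 1: keep only the datasets this user is permitted to see.
    allowed = PERMISSIONS.get(ctx.user, set())
    ctx.datasets = sorted(d for d in CATALOGUE if d in allowed)
    return ctx


def schema_awareness(ctx: Context) -> Context:
    # Layer 2: describe the columns of each permitted dataset.
    ctx.notes["schema"] = "; ".join(
        f"{d}({', '.join(CATALOGUE[d])})" for d in ctx.datasets
    )
    return ctx


def semantic_understanding(ctx: Context) -> Context:
    # Layer 3: toy keyword match standing in for real semantic retrieval.
    q = ctx.question.lower()
    hits = [d for d in ctx.datasets if any(w in q for w in d.split("_"))]
    ctx.notes["relevant"] = ", ".join(hits)
    return ctx


def temporal_context(ctx: Context) -> Context:
    # Layer 4: resolve a time window from the wording of the question.
    q = ctx.question.lower()
    ctx.notes["window"] = "last 7 days" if "last week" in q else "unspecified"
    return ctx


def task_intent(ctx: Context) -> Context:
    # Layer 5: separate operational checks, research analysis and exploration.
    q = ctx.question.lower()
    if any(w in q for w in ("status", "failed", "down")):
        ctx.notes["intent"] = "operational check"
    elif any(w in q for w in ("compare", "significant", "correlat")):
        ctx.notes["intent"] = "research-grade analysis"
    else:
        ctx.notes["intent"] = "exploratory question"
    return ctx


def response_synthesis(ctx: Context) -> Context:
    # Layer 6: write an audit entry, then assemble the response.
    relevant = ctx.notes["relevant"] or "no permitted dataset"
    ctx.audit.append(
        f"user={ctx.user} intent={ctx.notes['intent']} datasets={relevant}"
    )
    ctx.notes["answer"] = (
        f"[{ctx.notes['intent']}] would query {relevant} "
        f"over {ctx.notes['window']}"
    )
    return ctx


LAYERS = [access_control, schema_awareness, semantic_understanding,
          temporal_context, task_intent, response_synthesis]


def run(user: str, question: str) -> Context:
    ctx = Context(user, question)
    for layer in LAYERS:
        ctx = layer(ctx)
    return ctx


if __name__ == "__main__":
    print(run("alice", "How many training runs failed last week?").notes["answer"])
```

Placing access control first means later layers never see datasets the user cannot read, which is one plausible reason to put permissions at the bottom of such a stack.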

Engineers involved in the project describe the architecture as a response to a problem faced by many large technology groups: data abundance without coherence. As internal data volumes surged into the hundreds of petabytes, traditional tools struggled to provide a unified view. Kepler’s design aims to compress that complexity into a conversational interface without flattening important distinctions between data types, confidence levels and access rights.

A notable element of the system is its use of the Model Context Protocol (MCP), which standardises how the agent exchanges information with internal tools and services. By relying on a shared protocol, Kepler can pull live context from monitoring systems, experiment logs, documentation repositories and analytics platforms while maintaining a consistent reasoning flow. This approach reduces the need for bespoke integrations and allows new data sources to be added without retraining the core model.
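To make the protocol idea concrete, the sketch below shows a tiny in-process dispatcher that answers JSON-RPC 2.0 style messages, loosely modelled on the `tools/list` and `tools/call` requests used by the Model Context Protocol. It is not the official SDK and omits most of the protocol; the tool names (`monitoring.status`, `experiment_log.latest`) and their placeholder outputs are assumptions made for illustration, not Kepler's real integrations.

```python
import json
from typing import Callable

# Registry of tools; adding a data source means registering one more function.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str):
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("monitoring.status")  # hypothetical tool name
def monitoring_status(service: str) -> str:
    return f"{service}: (placeholder status)"


@tool("experiment_log.latest")  # hypothetical tool name
def latest_experiment(project: str) -> str:
    return f"latest run for {project}: (placeholder)"


def _error(req_id, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request encoded as a JSON string."""
    req = json.loads(raw)
    req_id = req.get("id")
    method = req.get("method")
    params = req.get("params", {})

    if method == "tools/list":
        result = {"tools": [{"name": n} for n in sorted(TOOLS)]}
    elif method == "tools/call":
        fn = TOOLS.get(params.get("name"))
        if fn is None:
            return _error(req_id, -32602, "Unknown tool")
        text = fn(**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}]}
    else:
        return _error(req_id, -32601, "Method not found")

    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


if __name__ == "__main__":
    request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
               "params": {"name": "monitoring.status",
                          "arguments": {"service": "search"}}}
    print(handle(json.dumps(request)))
```

Because the agent only ever speaks the shared message format, a new source is exposed by registering another function; the model itself is untouched, which is the property the article attributes to the protocol-based approach.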

The deployment has implications beyond productivity gains. By logging queries and responses, Kepler generates a detailed trail of how internal data is used, offering insights into knowledge gaps, recurring questions and potential risks. Compliance and security teams can review how sensitive datasets are accessed, while leadership gains a clearer picture of which information drives decision-making across the organisation.
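As a rough illustration of how such a trail could be mined, the sketch below counts recurring questions and lists which users touched datasets marked as sensitive. The record shape, the `SENSITIVE` set and the sample entries are all invented for the example; nothing here reflects OpenAI's actual logging schema.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditRecord:
    user: str
    question: str
    datasets: tuple[str, ...]


# Hypothetical set of datasets that compliance teams want to watch.
SENSITIVE = {"hr_records"}

# Invented sample entries, for illustration only.
LOG = [
    AuditRecord("alice", "Which eval runs regressed?", ("experiment_logs",)),
    AuditRecord("bob", "which eval runs  regressed?", ("experiment_logs",)),
    AuditRecord("carol", "Headcount by team", ("hr_records",)),
]


def recurring_questions(log: list[AuditRecord], min_count: int = 2) -> list[str]:
    # Normalise case and whitespace so near-identical questions are grouped.
    counts = Counter(" ".join(r.question.lower().split()) for r in log)
    return [q for q, n in counts.most_common() if n >= min_count]


def sensitive_access(log: list[AuditRecord]) -> dict[str, set[str]]:
    # Map each sensitive dataset to the set of users who queried it.
    seen: dict[str, set[str]] = {}
    for record in log:
        for dataset in record.datasets:
            if dataset in SENSITIVE:
                seen.setdefault(dataset, set()).add(record.user)
    return seen


if __name__ == "__main__":
    print(recurring_questions(LOG))
    print(sensitive_access(LOG))
```

A question that recurs across many users hints at a knowledge gap worth documenting, while the per-dataset user sets give reviewers a starting point for access checks.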

The project also sheds light on how advanced AI systems are being applied inside companies that build them. While public discussion often centres on consumer-facing assistants, Kepler illustrates a parallel trend: the use of large language models as internal operating layers. By embedding reasoning capabilities directly into data access, organisations can shorten feedback loops between insight and action, particularly in environments where data volume and velocity overwhelm manual analysis.

Observers note that the technical choices behind Kepler mirror patterns emerging in enterprise AI more broadly. Context layering, protocol-based tool access and strict permissioning are increasingly seen as prerequisites for deploying generative models in regulated or high-stakes settings. OpenAI’s internal experience, given its scale and scrutiny, is likely to influence how other firms architect similar systems.

The article Kepler lifts OpenAI’s internal data intelligence appeared first on Arabian Post.
